Product Features

-

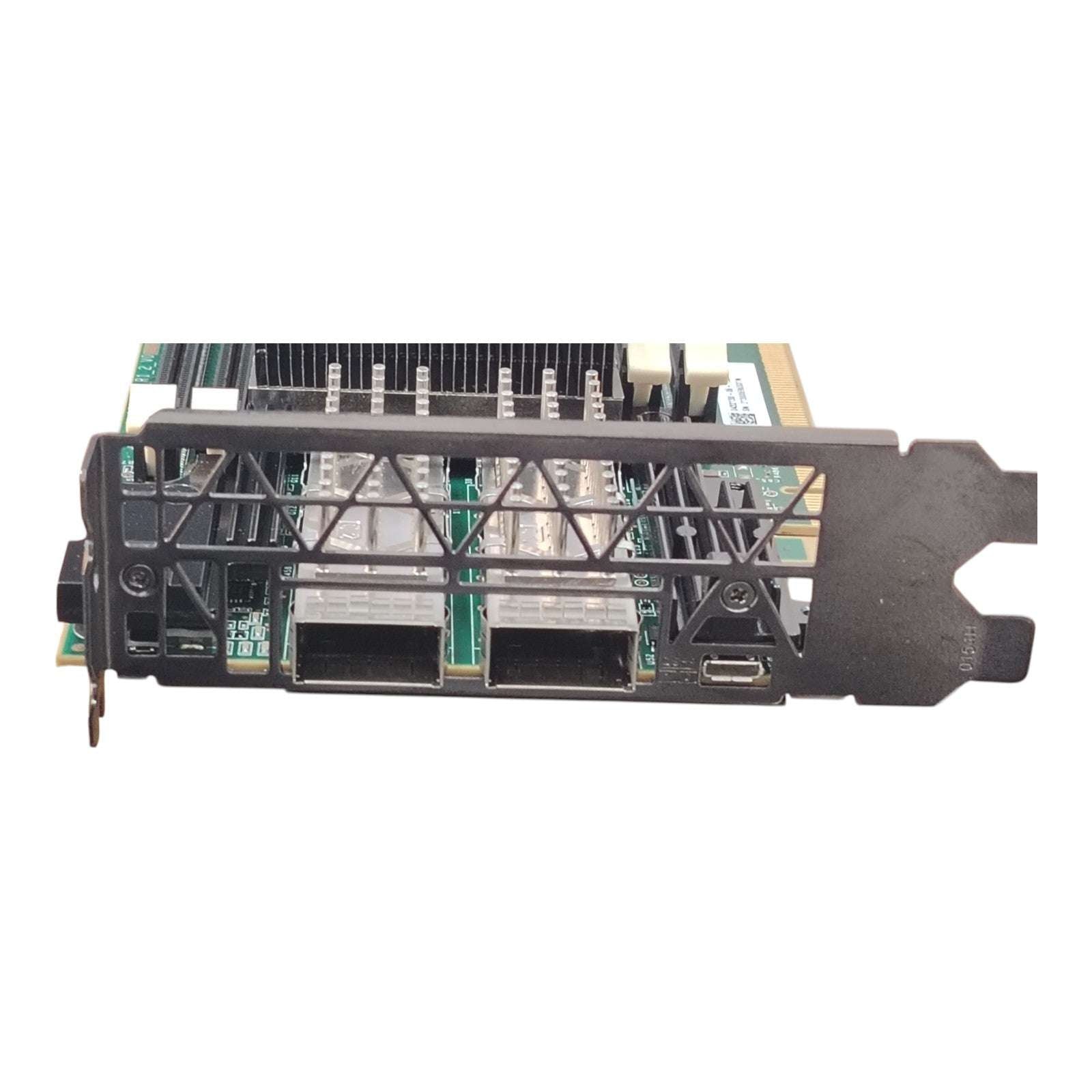

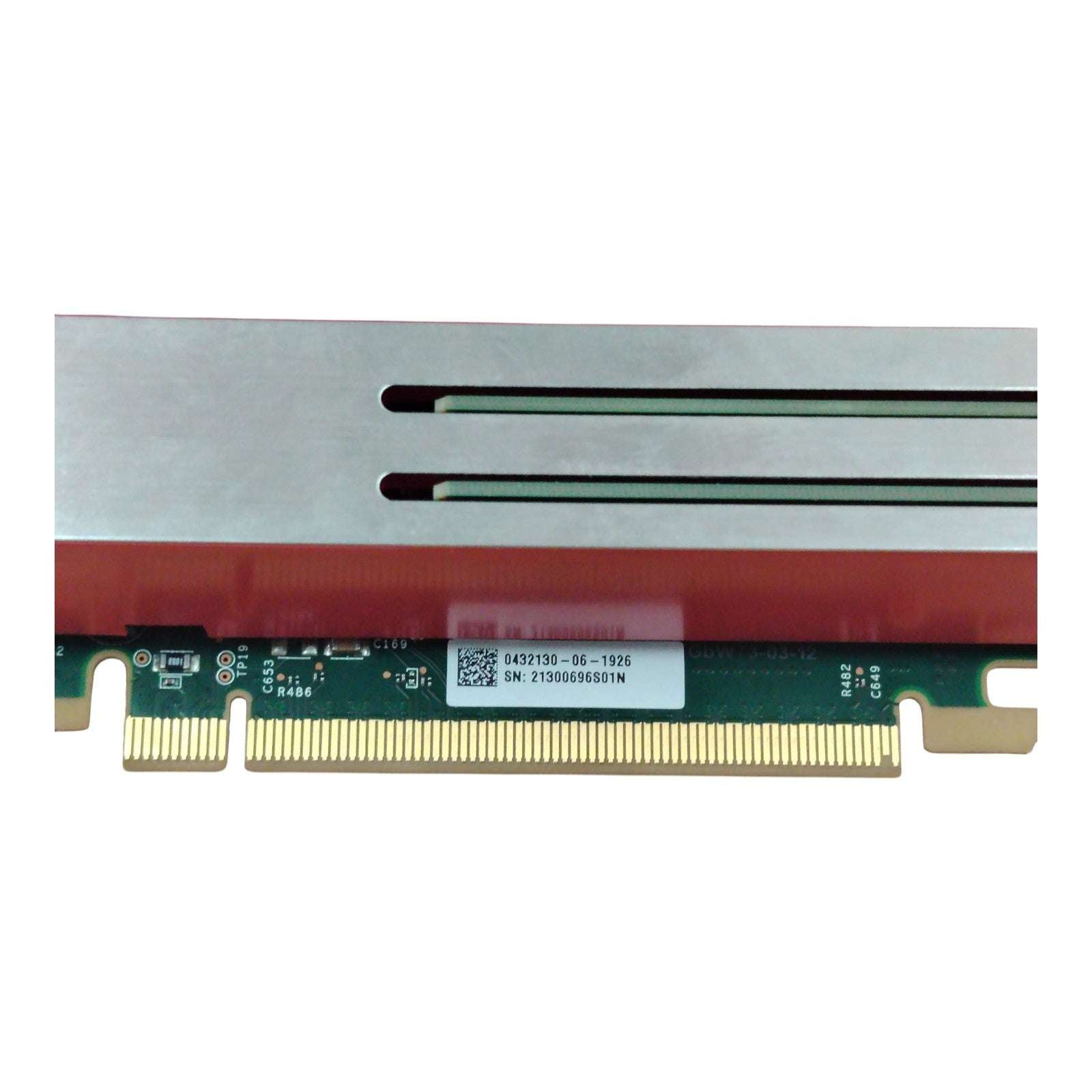

Supports 8 × Xilinx Alveo A-U200 PCIe accelerators for AI/ML and FPGA-based workloads.

-

Dual Intel Xeon E5-2620 v4 CPUs with 16 cores total and hyper-threading for parallel computing.

-

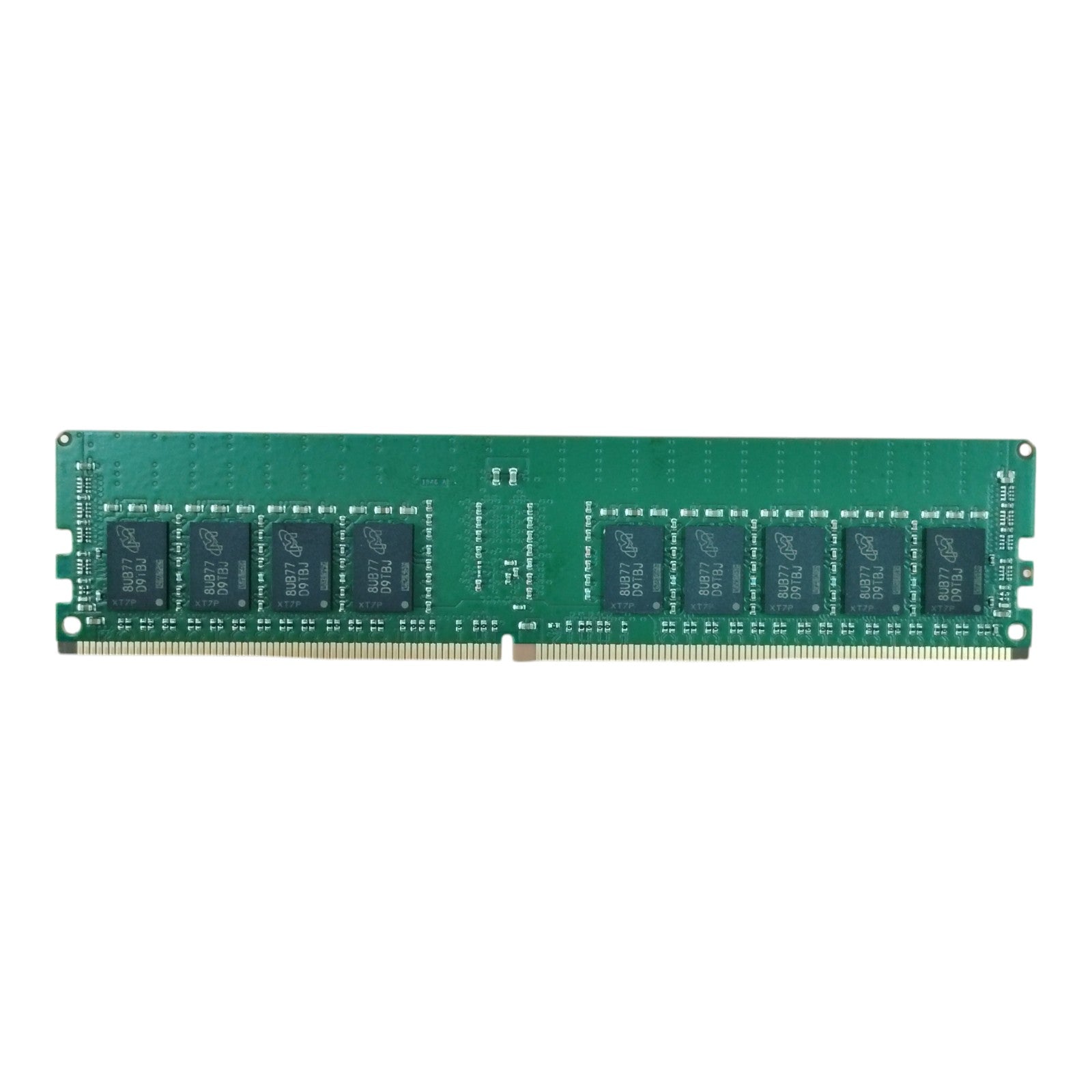

64GB dedicated GPU memory per card (via 4 × 16GB Micron DDR4 ECC modules per GPU).

-

4U rackmount form factor designed for data centers and HPC clusters.

-

Redundant cooling and power for continuous high-load operations.

-

PCIe Gen3 architecture ensures high bandwidth and low latency data throughput.

-

Ideal for AI training, inference, machine learning, financial modeling, and big data acceleration.

General Product Information

The ASUS ESC8000 G3 GPU Server is an enterprise-grade computing powerhouse engineered to handle AI, machine learning, and HPC workloads at scale. Featuring 8 × Xilinx Alveo A-U200 PCIe-based accelerators, this system is purpose-built for organizations that demand massive parallelism, high throughput, and ultra-low latency.

Powered by dual Intel Xeon E5-2620 v4 processors, the server delivers 16 cores and 32 threads of compute power. Each accelerator card carries 64GB of dedicated memory (4 × 16GB Micron DDR4 ECC modules), which helps keep performance consistent across demanding AI/ML models.

A Samsung 250GB SSD provides reliable OS and application storage, while redundant hot-swappable power supplies and high-performance cooling ensure maximum uptime in enterprise environments.

With its 4U rackmount design, the ASUS ESC8000 G3 integrates into HPC clusters, research facilities, and enterprise data centers. It is designed for FPGA-accelerated AI and ML workloads and targets tasks where FPGAs can outperform GPU-only systems, such as real-time inference, data analytics, and high-frequency trading simulations.

Technical Specifications Table

| Category | Specification |
|---|---|
| Brand | ASUS |
| Model | ESC8000 G3 |
| Form Factor | 4U Rackmount |
| Accelerators | 8 × Xilinx Alveo A-U200 PCIe Accelerators |
| Accelerator Memory | 4 × Micron 2400T 16GB DDR4 ECC per card (64GB per card) |
| CPUs | 2 × Intel Xeon E5-2620 v4 (8-Core, 2.1GHz) |
| Total CPU Cores | 16 cores / 32 threads |
| System Memory | Expandable (DDR4 ECC Registered) |
| Storage | 1 × Samsung 250GB SSD |
| Expansion Slots | PCIe Gen3 x16 slots |
| Networking | Compatible with enterprise 10GbE / 25GbE adapters |
| Power Supply | Redundant hot-swappable PSU |
| Cooling | High-efficiency redundant fans |
| Best Use Cases | HPC, AI, ML, deep learning, financial analytics, big data |
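
For capacity planning, the per-card and per-CPU figures in the table can be rolled up into system totals. The short Python sketch below does that arithmetic. The class and function names are illustrative only, and the bandwidth figure is the theoretical PCIe Gen3 limit (8 GT/s per lane with 128b/130b encoding), assuming each card is seated in a full x16 slot. Real-world throughput will be lower.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServerSpec:
    """Figures taken from the specification table (names are illustrative)."""
    cards: int = 8             # Xilinx Alveo A-U200 accelerators
    modules_per_card: int = 4  # Micron DDR4 ECC modules on each card
    module_gb: int = 16        # capacity of each module in GB
    sockets: int = 2           # Intel Xeon E5-2620 v4 CPUs
    cores_per_socket: int = 8
    threads_per_core: int = 2  # Hyper-Threading


def pcie_gen3_gb_per_s(lanes: int) -> float:
    """Theoretical one-direction PCIe Gen3 bandwidth in GB/s."""
    gt_per_s = 8.0        # raw transfer rate per lane
    encoding = 128 / 130  # 128b/130b line-encoding efficiency
    return gt_per_s * encoding * lanes / 8  # bits to bytes


spec = ServerSpec()
memory_per_card_gb = spec.modules_per_card * spec.module_gb
total_card_memory_gb = spec.cards * memory_per_card_gb
total_cores = spec.sockets * spec.cores_per_socket
total_threads = total_cores * spec.threads_per_core
slot_bw = pcie_gen3_gb_per_s(16)  # assumes an x16 link per card

print(f"Memory per card:        {memory_per_card_gb} GB")
print(f"Total card memory:      {total_card_memory_gb} GB")
print(f"CPU cores / threads:    {total_cores} / {total_threads}")
print(f"PCIe Gen3 x16 per slot: {slot_bw:.2f} GB/s (theoretical, one direction)")
```

With the listed configuration this gives 64GB per card, 512GB of accelerator memory across all eight cards, and roughly 15.75 GB/s of theoretical one-direction bandwidth per x16 slot.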

Compatible Products

- NVIDIA or AMD GPU servers for hybrid compute clusters
- Dell EMC, NetApp, or Pure Storage SAN/NAS solutions
- 10/25/40GbE networking switches (Cisco, Arista, Mellanox)
- AI/ML frameworks (TensorFlow, PyTorch, Caffe, MXNet)
- Operating systems: Ubuntu, RHEL, SUSE, Windows Server, VMware ESXi

Ideal Use Cases

- AI/ML training and inference clusters
- HPC research and supercomputing workloads
- Financial modeling & high-frequency trading
- Genomics and bioinformatics data processing
- Big data analytics and cloud AI acceleration

Comparison Angle

The ASUS ESC8000 G3 stands out by combining FPGA acceleration with enterprise-class compute power. Where most servers in this class are populated solely with GPUs, this configuration uses 8 × Xilinx Alveo A-U200 cards to run FPGA-accelerated AI, ML, and HPC workloads, which can improve energy efficiency and parallelism for workloads that map well to FPGAs. Compared to previous generations, it offers higher PCIe bandwidth, advanced redundancy, and enhanced scalability for large-scale deployments.